Last Thursday we hosted our most recent Mayfield CXO Insight Call: “Practical Insights from the BCG Team on Real AI Engagements and Deployments,” where Navin Chaddha, Managing Partner at Mayfield, and the BCG team, including Akash Bhatia and Matthew Kropp, shared a look at AI in practice, including real-world experience they’ve gained from working on actual AI projects.

Here are some of the key learnings:

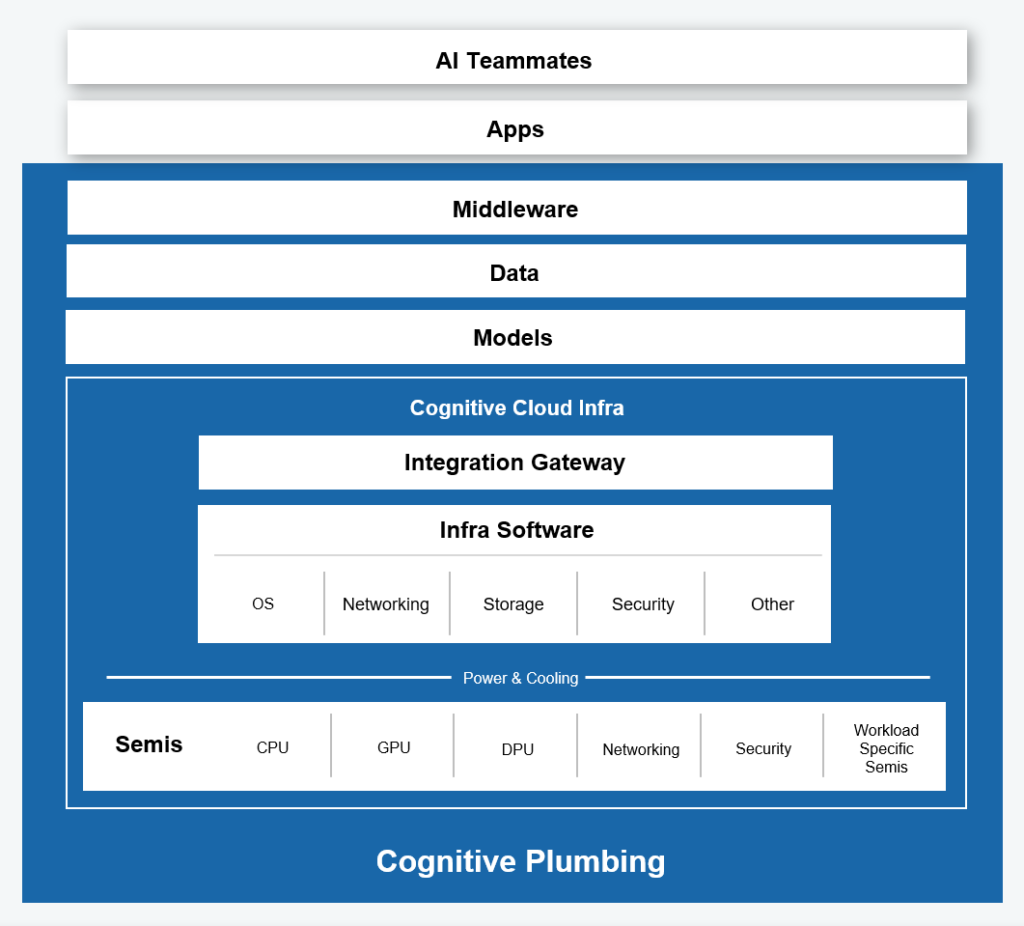

It’s been about 2 years since ChatGPT broke onto the scene and changed the AI landscape. Since that time, the conversation has evolved tremendously, and Mayfield has developed its own thesis on how we see the space. In the past, we’ve seen similar mega-trends including Infrastructure-as-a-Service, followed by PaaS and SaaS. AI will be a continuation of these waves, and we coined the term: “Cognition-as-a-Service,” which is our interpretation of how GenAI will be both delivered and consumed. Opportunities will exist up and down this entire stack.

The bottom four layers we call cognitive plumbing: cloud infrastructure, with models acting as the new OS. Once you have infrastructure and models, you need data, and then middleware and tools in order to build applications.

The next level up is intelligent applications, which we’ve traditionally referred to as SaaS applications – but what we’re most excited about is the very top – the layer that we’re calling AI teammates. We see these teammates as being digital companions that work alongside humans collaboratively. They’ll take us into a new era of collaborative intelligence by automating tasks, accelerating productivity and augmenting human capability.

We’ve put together some bottom-up research to support our emphasis on teammates. Instead of considering the TAM of enterprise software globally (around $650B), we believe the opportunity for AI teammates lies in job replacement, specifically in areas where humans don’t want to do the work, can’t do the work, or where there are simply not enough people to do the work. The total knowledge worker market, including salaries, compensation and other overhead, is around $30T worldwide. Our belief is that over the next 7-8 years, 20% of it will be filled by AI teammates. That makes this a $6T opportunity, 2x global IT spend today (excluding telecom services and devices): a truly massive opportunity and a new frontier.
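The sizing above is a two-step calculation. As a rough check, here it is written out; note that the ~$3T global IT spend is implied by the stated 2x ratio rather than a figure quoted on the call:

```latex
\underbrace{0.20}_{\text{share filled by AI teammates}} \times \underbrace{\$30\,\text{T}}_{\text{global knowledge-worker spend}} = \$6\,\text{T},
\qquad
\frac{\$6\,\text{T}}{2} = \$3\,\text{T} \quad \text{(implied global IT spend, excl. telecom services and devices)}
```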

How did Mayfield arrive at the $30T market figure behind the AI teammate opportunity?

The framework we used is based on the global spend on knowledge workers ($30T today) and which roles are open. Based on market research into customer support, sales engineering, development, product management, finance, and HR, we estimate that 20% of that spend will move to AI teammates. We have validated this against Bureau of Labor Statistics data, some of which is available. In short: this spend is coming out of the OPEX budget. It won’t be software spend.

Is the term “digital companions” anthropomorphizing the technology as opposed to thinking about all this change at the business process or business strategy level? Improving processes is extremely rich in itself.

Executives today are definitely thinking a lot about how to reshape processes, how to think about work, how to think about employees doing that work, and ultimately, how that work could be redesigned. We’re not in the world of widespread AI companions today, but there are still many opportunities available across the AI landscape. Today’s market places a large emphasis on process automation – and once we see significant productivity gains there, companies will start thinking about what else they can do.

So, you’re seeing a lot of startups doing RPA 2.0 for things like accounts receivable reconciliation and settlements, and there are also basic assistants that can detect, triage, and then take action by notifying a human in areas like security, sales, or engineering. These markets aren’t necessarily massive today, and demand for these tools is still concentrated among early adopters, so we’re likely to see more widespread adoption over the next several years.

How will these changes impact the current SaaS market?

It will be disrupted. Enterprise software used to be a license-based business built on perpetual licenses, but over time it moved to a subscription model where you pay per employee per month (typically collected upfront). The beauty of where AI is going is that it opens up an opportunity for pay-as-you-go, paying for these tools only as you use them. We see AI transitioning the market to a consumption-based model, and as a result, it will be very difficult for existing SaaS companies to pivot. New companies won’t position themselves as software; they’ll present themselves as replacing labor, and if the labor isn’t used, you won’t pay for it.
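To make the contrast concrete, here is a minimal Python sketch of the two billing models. The prices, the `Usage` fields, and the idea of a billable “task” are hypothetical, chosen only for illustration; real contracts usually mix in minimums, tiers, and overages.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    seats: int            # licensed users on the contract (hypothetical)
    tasks_completed: int  # units of work actually performed this month (hypothetical)


def subscription_cost(usage: Usage, price_per_seat: float) -> float:
    """Seat-based SaaS: every seat is billed, whether or not it is used."""
    return usage.seats * price_per_seat


def consumption_cost(usage: Usage, price_per_task: float) -> float:
    """Consumption-based: only work actually done is billed."""
    return usage.tasks_completed * price_per_task


if __name__ == "__main__":
    # Hypothetical prices and volumes, for illustration only.
    busy = Usage(seats=10, tasks_completed=4_000)
    idle = Usage(seats=10, tasks_completed=0)
    for label, month in [("busy", busy), ("idle", idle)]:
        print(label, subscription_cost(month, 150.0), consumption_cost(month, 0.50))
```

In the idle month the seat-based bill stays at 1500.0 while the consumption bill drops to 0.0, which is the “if the labor isn’t used, you won’t pay for it” point in miniature.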

VCs today need to identify high-ROI opportunities. Andrew Ng has argued that app revenue ultimately has to flow down to pay for infrastructure (IT) revenue, so app revenue is likely to be bigger. Does that fairly represent the likely share of profit, given that normal profit maximization and competitive dynamics will take place? Or will those dynamics not happen in this case?

We’ve always grown up in this world around infrastructure and if we think about an application, there’s a database, there’s some middleware, and there’s a UI on top of it, and collectively we call it an app. We’ve been doing that forever. But if you think about the future where you just talk to a computer and it does something for you – is that an app? This will still need all the layers of the stack to work properly, so it’s not necessarily clear how to parse out what is an app and what is infrastructure.

It will be interesting to see how pricing models evolve, however, because Salesforce announced Agentforce yesterday, where they’re moving to a price per conversation. Will we move to a world where agents are paid like a person, on an hourly basis? A significant amount of profit could accrue to that agent, or that app, because it’s taking the place of a human, so the anchor price we’re comparing it to is the human hourly rate. Software has always been interesting because the marginal cost is basically zero, yet software companies are able to keep a significant share of the profit. We don’t expect agent pricing to collapse down to marginal cost, but we’ll see.

GenAI has been a huge and growing business for the BCG team, going from zero to a very sizable portion of revenues over the last year. BCG works with clients on their AI and GenAI strategy, but also gets very hands-on, helping clients build out entire solutions.

Recently, BCG completed a benchmarking survey in which they interviewed over a thousand CXOs across ~3,500 companies, and they came back with some pretty interesting insights. Today, only around 25% of companies are successfully using AI to generate value, but for those companies, the business value is fairly significant: it works out to an average of 3-4% in both cost savings and revenue generation today, in 2024. The profile of these companies is no surprise to anyone: they are more mature, digitally oriented players, many of them in financial services. BCG projects this rising to ~8% for both metrics within around four years.

One thing to consider though, is that today there’s a huge emphasis on cost and cost savings. And while cost savings are appealing – forward-thinking companies are going to be the most excited about new lines of revenue. It’s important not to think about AI as merely being a cost-savings operation – and begin to imagine how to improve the top-line while utilizing these tools.

Insurance is one of the industries leaning the hardest into the AI revolution today, attempting to apply AI across a wide variety of areas – one of which is underwriting. By design, underwriting involves a lot of documents, interpretation and generation of language. Initially, BCG assumed the focus would be on costs – underwriting the same policies with fewer underwriters. However, the program ended up focusing on automating the front end – gathering information, summarizing, and distilling down a bunch of different documents. The automation helped remove about 80% of that effort. But the result wasn’t that fewer underwriters were needed…the result was that underwriters could quote a policy in a few hours instead of a week. The win rate went up because they were issuing policy quotes much faster than their competitors. Even better – they were previously unable to write policies for all of the requests they were getting, and now they could. So in the end, for them, it wasn’t about cutting costs, it was about generating more revenue and driving up the business.

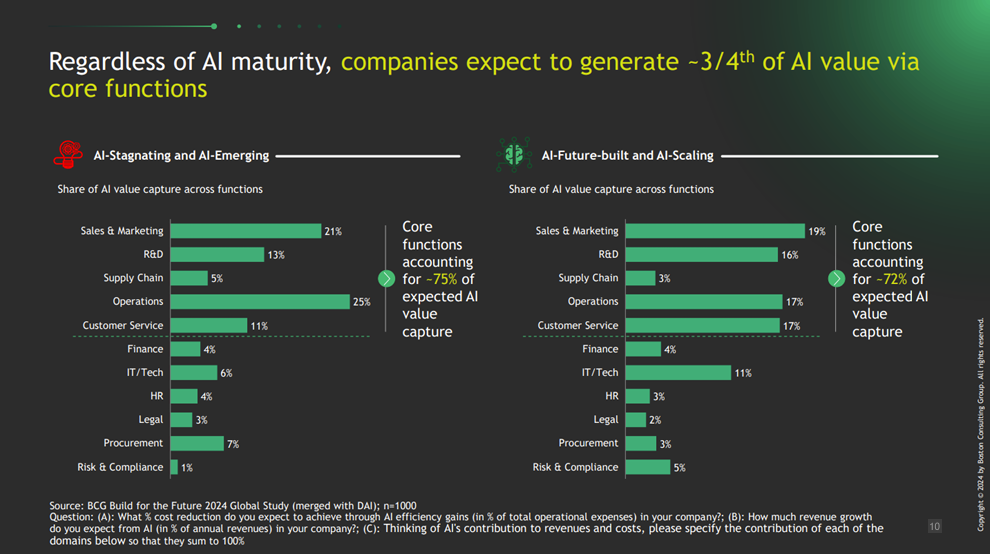

Where are we seeing value being generated? Today, operations, sales and marketing, R&D, customer service, and supply chain account for about 75% of the total value. So this isn’t just a benefit to support functions; it’s actually in the core. You’ll see this across a wide variety of industries. The exact mix will vary (a software company, for example, may see more of it in some functions than in others), but it’s a good rule of thumb.

So where is it best to focus your effort? Think about where value is going to come from – it’s not going to be in risk and compliance. Many companies are seeing great success using AI in use cases as simple as deploying copilots for basic things like development or copywriting. If it’s in the core of the business, it’s still going to make an impact.

There are three complementary strategic plays to maximize the value potential of AI, which must be grounded in strategy and underpinned by investments:

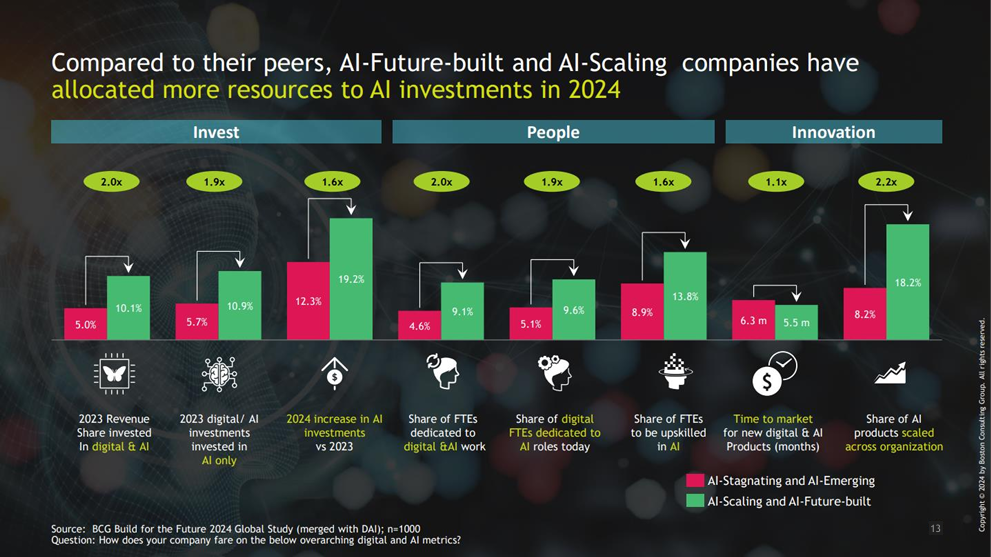

Mature companies have more breathing room for investment into innovation – they’re investing more revenue into this stuff, they’re putting more people through training, and they’re getting a higher rate of success as a result. Many business leaders are asking: how much should we be spending on it? Should we take it out of existing IT budgets?

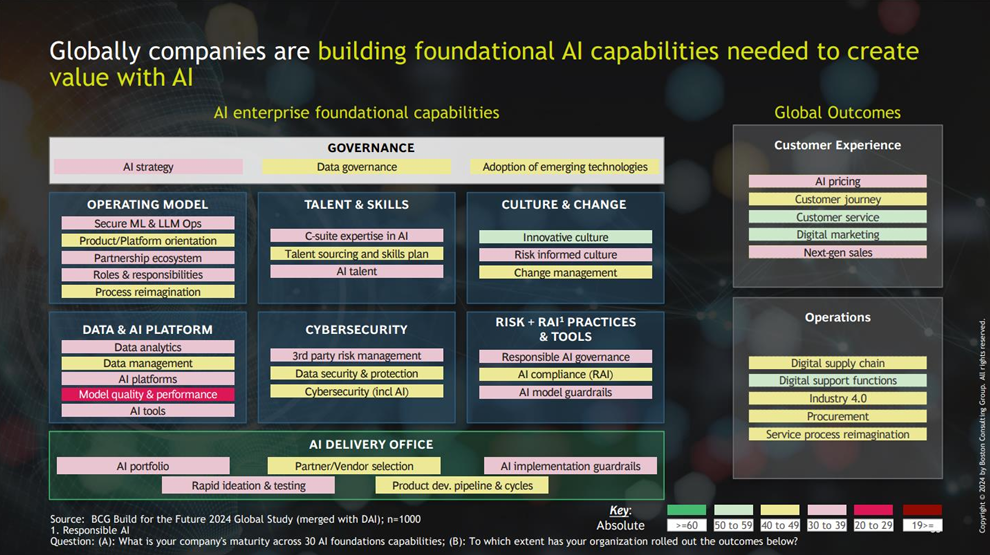

First, we need to ask: where are global companies today? It’s pretty clear from a recent BCG survey that organizations are still in the early innings. There’s not a lot of green on the chart above, and where there is, it’s in low-hanging fruit such as innovation, customer service, and digital marketing. Everything else is more yellow and red. For these other areas to gain traction and success, the biggest obstacle will be change management.

BCG has been working with a large global bank that would score fairly high on the survey above. The bank launched over 20 pilots last year in an effort to use GenAI to drive transformation. Part of this was setting an ambitious goal for cost savings and revenue, and then cascading it down to the different business units and functions as targets. That way, everything is baked into budget planning, as well as into the incentives and goals for the different business unit leaders.

A big part of the effort has been starting at a high level and asking: where’s the opportunity? They identified nine big, high-value areas in which they intend to invest significantly, in some cases on the order of 20-50 million dollars per initiative. But beyond technology, companies need to figure out the process.

The project then becomes building out the suite of tools and driving internal adoption so that employees in that part of the business actually use them and change the way they work. This is at least a three-year journey; it doesn’t happen overnight.

What’s actually going into production today, and what’s keeping things from taking off? What percentage of projects actually get deployed into production, and why are the rest failing to get there?

Today, only a small number of GenAI projects are in production: fewer than 20%, though that is changing. It’s important to de-average the answer. If you look at software companies, all of them have deployed GenAI to some degree (in marketing, engineering, customer support, etc.). So digitally-forward companies have things in production today, but in other sectors it’s definitely slower.

GenAI and its abilities are all about the order of words: stochastic mimicry, so to speak. Will it have a greater impact on industries that care a lot about the order of words (e.g. legal contracts)?

BCG hasn’t necessarily been evaluating industries with that lens in mind, but there perhaps could be a correlation. If you look at software engineering, where there’s code being generated – you could consider that text generation. If you look at marketing, or customer support, some of these things are very text heavy – and you’re definitely seeing early adoption in these areas.

If you think about the phrase “order of words” – it implies that GenAI is just really good at constructing human-sounding language. But – there are a couple of levels that should be considered on top of that. These models are becoming better and better at understanding. So not only can they write something that sounds like a human writing or speaking, they have an embedded understanding of concepts – which is very powerful. So, if we use that legal example – you want to be able to construct a certain clause within a legal contract, but it’s very useful for a model to actually understand the underlying principles of law. It enables you to use it far more effectively.

Finally, these models are also getting better and better at logic and reasoning. If you’ve had a moment to check out OpenAI’s new release, its new class of model, o1 (codenamed “Strawberry”), can take your question, go through an iterative process of reasoning, and generate complex outputs that previous models were unable to achieve. We’re going to keep seeing this step up in models’ ability to reason, which will have a real business impact on software development, product development, strategy development, etc.

In short: models are better than just being stochastic parrots, but some of this evolution is going to take time.

What partners should enterprises lock in now? There’s definitely a choice between all the different hyperscalers, but who else is fundamental to the tech stack? What parts should be bought, built, or rented?

There are three layers worth paying attention to:

The first layer is the hyperscaler. Most companies have already chosen one, or have some kind of multi-cloud strategy, since all three of the major players are quite capable. Often, this is just a question of where a company’s data already sits.

The second level is positioning around platforms. Are you planning to be fully best-of-breed with internal dev capabilities? Are you willing to assemble the whole suite of tools yourself, or would you rather standardize on Amazon SageMaker or Microsoft’s AI platform to do some of the stitching together of the various components? It’s not a huge choice, but it reflects your stance on how you’re going to develop. Are you centralizing development within your IT group? Are you working with vendors?

Then there are the models. Are you talking about OpenAI? OpenAI delivered through Azure? Google Gemini? AWS Bedrock, which here means using Anthropic? These are all very capable models. There are some differences, and they’re all locked in an arms race at the moment, so someone will always be a little bit ahead, but you’re pretty much covered if you choose any of those three.

Some clients are also choosing to run an open-source model such as Llama 3 within their own cloud. That may come down to wanting more control over their own data, or to having a specific application that really needs fine-tuning. Or maybe it’s about cost. But these three layers are a good way to think about your options.

AI has been around for a long time now, with GenAI being the newest iteration. When BCG and others are saying “AI deployments” – what does that really mean? Traditional AI/ML has already been delivering value for 5+ years and there are some pretty scaled examples in the market today.

BCG’s survey results cover AI in the broader sense, not just GenAI, although GenAI has certainly sucked the air out of the conversation and is dominating everyone’s focus. In two years we likely won’t be talking about GenAI anymore; we’ll just be talking about AI, because most applications combine both.

If you bring in an LLM, it will enable new capabilities (e.g. language understanding, generation, etc.), but you can then combine that with predictive AI, which is far more efficient and frankly, for a lot of applications, the only way to go. You won’t do forecasting with an LLM, you’ll do it with a predictive model, but you might combine that with the language understanding of an LLM.

Finally, there’s a third element, which is good old fashioned automation – you could put RPA in that bucket. All three together are really necessary to obtain value. The conversation has been focused on GenAI because it’s a new and exciting thing, but we’re definitely thinking more broadly as this rolls out.

Any strategic or differentiated advantage that AI could provide will ultimately be adopted industry-wide, and then you’re just keeping up with the Joneses. How are companies differentiating with this stuff, given the common models everyone’s building on?

Hyperscalers by definition are horizontal, so they’re going to have horizontal solutions for everyone. Building on top of that is fine. Then you look at the large apps: Salesforce has just announced Agentforce, SAP has Joule, and so on with Oracle, Adobe, etc. So if you think about it this way, everyone has AI baked into those solutions, and if everyone adopts them, then over time, by definition, we’ll all have the same processes and advantages.

But, if you look at AI from first principles, that might create a window for a competitive advantage in the world. You will need to take some of these first principles approaches to certain functions or processes and re-imagine them.

Another piece of this is going to be speed – how fast are you going to become AI-enabled? Even if it is just via the apps. Will that create a competitive advantage?

Many digital strategy teams are already in place at most large organizations – could this effort be integrated into one? Most organizations have already been working on digital transformation – so it already has its own track. Is it time for those teams to pivot to this new emerging capability, or should these teams operate independently?

Today, most companies are bringing everything under the umbrella of digital strategy. Everyone has their digital transformation initiatives, AI initiatives, and data science/big data teams. What we’re seeing companies do is pause, but not for long, to ask: I have all these projects in the pipeline; how does this new technology affect them? For many, it won’t have any effect, and those projects keep going. Some projects get canceled because they’re now somewhat irrelevant, but in many cases, projects start getting done in a different way. Imagine you had a project with a lot of NLP in it: you might have been looking at a more efficient NLP algorithm, but LLMs may bring a lot of value here, because they won’t just extract from text; they’ll understand it and enable far more impactful end goals than prior NLP technology. All of this is getting brought together, predictive AI as well as GenAI.

Many global CIOs want to be able to bring the LLMs or embedding models to the data where it resides, and a large percentage of them, whether it’s financial services, insurance, etc. still have their data residing on-prem. What are some of the current solutions available for such a scenario? Barring Cohere, none of the model companies are willing to provide models for on-prem needs, or do basic things like indemnify the end libraries.

Open-source models can be run on-prem, but you’re not going to use all of the data that’s sitting on-prem for GenAI-based applications. I really encourage clients to start with:

What’s the application that I’m building?

What’s the data I need for that application?

Where does the data sit?

BCG was working with a tech company on a customer support chatbot. The company started out saying it had 30 data sources and needed to put them all into the LLM to create a great customer support bot. But once the team dug a little deeper, BCG realized they didn’t need 30 data sources; they only needed one, a high-quality source that was already vetted and curated on the company’s website. The moral: don’t try to bring all of your data to all of your problems. AI requires data, but you don’t necessarily need to bring it close to all of your data. That said, Cohere is a good option for use cases where you do need to.

Data governance is turning out to be an obstacle within a lot of large organizations. Who owns the data governance problem and how should organizational roles be defined around a Head of AI?

There are a couple of dimensions to this. First, there’s infosec and data residency, particularly for companies operating in the EU. The EU has a lot of new AI regulation, with significant penalties for violations (up to 7% of global revenue!). So being conscious of where data lives, where it’s moving, and what’s in it is quite important. But fundamentally, it’s no different from any other digital project. There’s a lot of unjustified fear around data being used to train someone else’s LLM. If you have an enterprise agreement with any of the major providers, they are legally bound not to train on your data. So a lot of those complaints feel like a bit of a red herring.

Furthermore, if you don’t have an enterprise ChatGPT-type solution, employees may very well be using the free versions and putting confidential information there instead. The way you mitigate that risk is bringing everyone into the enterprise version where they’re covered under the contract.

We’re seeing a proliferation of companies offering governance platforms. How much adoption of these products are you seeing in the AI projects you’re implementing?

Not many yet. There’s a lot of discussion around governance platforms out there, and there is potential value, but BCG isn’t seeing clients adopt them yet. It may just be too early in the cycle.

Could there be a large gap between what we think is going to happen and what’s really happening? Take Waymo as an example: the cost of taking a Waymo is the same as an Uber. The only difference is that you’re not paying a driver, you’re paying Google. So it’s hard to imagine these types of things becoming a reality. We can barely get customer service agents to be autonomous without someone in the background ensuring they avoid liability issues. Today, I think we’re still far away from agents being autonomous, and I’ve been disappointed. I would love a replacement for many modern SaaS apps, but today I’m just not seeing one.

To some extent it’s understandable where you’re coming from. We’re at the beginning of the trough of disillusionment, but the good news is that once we’re through it, we should start to see impact. We need to make it through the next 1-3 years before that starts to happen. But I do believe there’s light at the end of the tunnel.