A number of LLM tools have emerged to assist software engineers, making them more productive, efficient, and effective. Starting with GitHub Copilot, which became generally available in 2022 and turned natural language prompts into usable code, companies have tackled problems ranging from autocomplete and refactoring to new code generation and automated testing.

Even just a year ago, many developers were hesitant to trust any sort of automated code generation tool, on both accuracy and security grounds. Now, with Copilot’s user base rumored to have surpassed 1M developers, and with the tool’s 35% acceptance rate for code suggestions, the outlook has changed completely. Autocomplete tools like Copilot have become table stakes, and many developer workflows now rely on LLM-driven enhancements to save time and produce first drafts of programs, documentation, and testing libraries. In fact, by early 2023, Copilot was behind an average of 46% of developers’ code across all programming languages. With more than 25M software developers globally, and with ML capabilities rapidly advancing to enable more nuanced and context-dependent integrations with code libraries, LLM-driven developer automation promises to be a massive category.

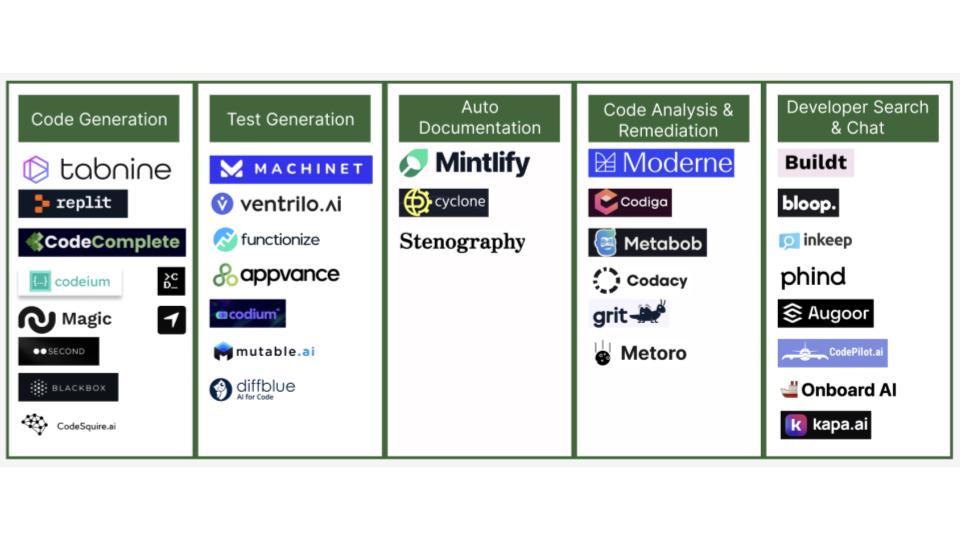

We have seen five main categories of focus within the larger umbrella: Code Generation, Test Generation, Auto-Documentation, Code Analysis & Remediation, and Code Search & Developer Chat.

1. Code Generation. LLM tools enhance developer workflows by providing code suggestions, autocompleting code in real time, and even generating new code from scratch. They understand natural language queries and codebase context, so they can provide relevant code snippets, functions, and even entire classes.

2. Test Generation. LLMs can auto-generate tests by interpreting natural language descriptions of desired test cases, and generating the corresponding code. Existing tools can provide unit tests, integration tests, and QA tests, accelerating a generally tedious process, and helping to ensure comprehensive test coverage.

3. Auto-Documentation. LLMs can auto-generate documentation by analyzing code libraries, extracting relevant information, and understanding code structure, then using this data to create clear and structured documentation in formats including HTML, Markdown, or PDF. LLM-powered tools address the key pain points in today’s process. They break down documentation silos to synchronize information across sources within a codebase, and they generate user-friendly documentation that is well-structured, searchable, and easy to navigate. They also update documentation automatically as code evolves, removing a huge source of manual maintenance effort that many developers face today.

4. Code Analysis & Remediation. LLM tools are able to analyze codebases, identify vulnerabilities, and automatically remediate issues in real time. These tools leverage their understanding of natural language and programming best practices to provide insights, suggestions, and reports that help developers improve the quality, security, and maintainability of their code. Automating remediation and code migrations (e.g. Grit) enables software teams to greatly reduce their tech debt.

5. Code Search & Developer Chat. LLM-powered code search and developer chat applications enable greater collaboration and efficiency in navigating and querying complex codebases. Tools like Buildt, Bloop, and Onboard provide semantic search capabilities, while tools like Inkeep and Kapa provide real-time communication platforms that help developers find answers to their questions.

The elephant in the room for VCs and new founders in the space is the question of where, across all these categories, there is the most opportunity for new startups to play and succeed. Answering that question depends on a number of factors:

Suitability of tasks to automation

Certain types of developer tasks are better suited for automation than others, especially given current tech capabilities. Today, these tasks include repetitive, manual work (code autocomplete, standard documentation), “jump-starting” code (initial code suggestions to help developers escape writer’s block and get into a flow state), and making quick fixes to existing code (such as adapting open-source code with iterative queries). Tools that focus on automating these repetitive tasks have served as effective initial wedges. However, in the long run, as tech capabilities advance, there is enormous opportunity to expand beyond the more tedious use cases and tap into more complex workflows, such as refactoring entire codebases and rewriting legacy applications. Successfully automating these tasks requires a deeper understanding of company context and nuanced requirements than is available today, but these categories will likely grow rapidly with model breakthroughs.

Metrics that move the needle

The first step to widespread developer adoption of generative AI coding tools is building trust, and this is what early movers in this space have focused on proving. Copilot’s primary success metric out of the gate was the percentage of its auto-generated code that users implemented in production.
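As a rough illustration of how a trust metric of this kind could be tracked, the sketch below computes a simple acceptance rate from a log of suggestion events. The `SuggestionEvent` record and the sample data are hypothetical, and counting accepted suggestions is only a proxy for code that actually reaches production; this is not how Copilot itself measures the figure.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SuggestionEvent:
    """One code suggestion shown to a developer (hypothetical log record)."""
    accepted: bool


def acceptance_rate(events: List[SuggestionEvent]) -> float:
    """Return the fraction of shown suggestions that were accepted."""
    if not events:
        return 0.0  # no suggestions shown, so nothing to measure
    return sum(e.accepted for e in events) / len(events)


# Hypothetical sample: four suggestions shown, two accepted.
events = [
    SuggestionEvent(accepted=True),
    SuggestionEvent(accepted=False),
    SuggestionEvent(accepted=False),
    SuggestionEvent(accepted=True),
]
print(f"Acceptance rate: {acceptance_rate(events):.0%}")
```

A production version would likely also track whether accepted code survives later edits, since a suggestion that is accepted and then rewritten says little about trust.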

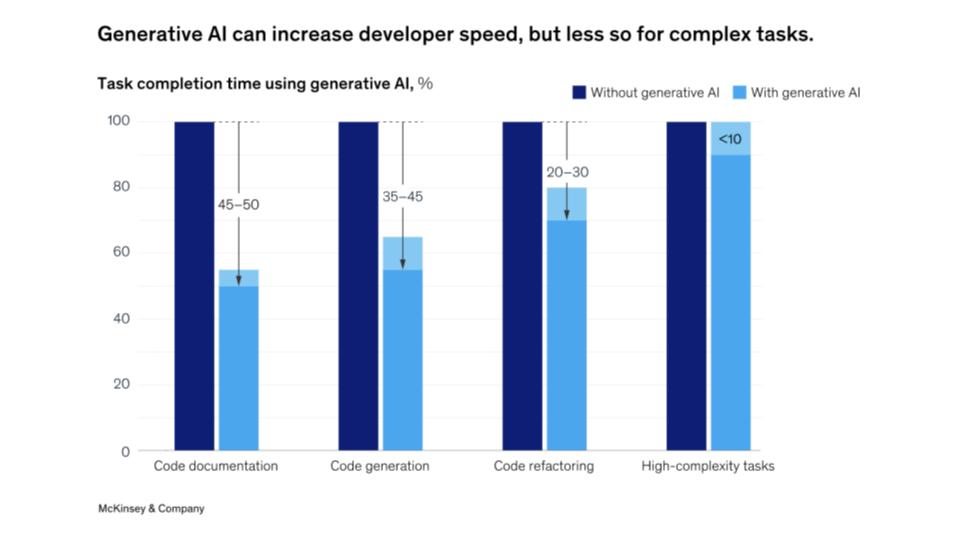

Moving forward, success of these tools and their ability to drive spend amongst users will depend on metrics that prove increased developer efficiency and productivity. Most directly, this can be measured by developer-hours saved by automating time-consuming tasks. Early research has found, for example, that with AI-powered tools, code documentation can be completed in half the time, new code generation in roughly half the time, and code refactoring in nearly two-thirds the time (graph below).
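To make the developer-hours framing concrete, here is a minimal sketch that converts the time ratios quoted above (half, roughly half, and nearly two-thirds) into hours saved. The weekly baseline hours are invented purely for illustration, and the ratios are rounded to exact fractions.

```python
from fractions import Fraction

# Share of the original time each task takes with AI tools, per the
# research cited above (rounded to exact fractions for this sketch).
TIME_RATIO = {
    "documentation": Fraction(1, 2),
    "new_code": Fraction(1, 2),
    "refactoring": Fraction(2, 3),
}


def hours_saved(baseline_hours):
    """Map each task to the hours saved given its baseline hours."""
    return {
        task: float(hours * (1 - TIME_RATIO[task]))
        for task, hours in baseline_hours.items()
    }


# Hypothetical weekly hours for one developer without AI tools.
baseline = {"documentation": 4, "new_code": 10, "refactoring": 6}
saved = hours_saved(baseline)
total_saved = sum(saved.values())
print(saved, total_saved)
```

Under these assumed baselines, the developer recovers 9 of 20 hours per week, with the largest gain coming from new code generation simply because it takes up the most time to begin with.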

Beyond the direct impacts, spillover effects of these efficiency gains include increased developer satisfaction and a sense of fulfillment from work. Early research shows that developers using gen AI-based tools are more than twice as likely to report overall happiness, fulfillment, and a state of flow due to decreased “grunt work” and the ability to focus on more satisfying tasks. As this trend continues, companies can turn to these tools as a way to attract exceptional employees who like solving tough problems, and promote retention of talented employees.

Incumbent-dominated spaces

Incumbents have a major advantage when it comes to LLM-powered tools. Developer automation products backed by major cloud providers, like GitHub Copilot and Amazon CodeWhisperer, have already established a strong foothold, with new tools from existing companies, like Replit’s Ghostwriter and Sourcegraph’s Cody, showing early promise as well.

Currently, incumbents have both a data and distribution advantage that poses a major challenge for new startups. On the data front, tools like Copilot have a huge leg up because they can train on and learn from their existing corpus of code data from GitHub and from popular IDEs like VS Code. In addition to their massive code dataset, they can also utilize behavioral data from existing tasks and workflows to inform new product designs that will maximize user engagement. Distribution-wise, incumbents can hit the ground running by upselling new automation products to existing users, while new entrants have to start from square one and acquire each new user afresh. Companies like GitHub have a heightened advantage since many code automation products are directly implemented into existing workflows, making it seamless for users to expand into adjacent use cases.

Despite these advantages, there are clear opportunities for successful new entrants.

Firstly, some of the incumbent advantages, specifically on the data front, are likely to erode over time. While Copilot currently benefits from access to the best models through its relationship with OpenAI, other models will likely catch up over the coming months, making this a short-lived advantage.

There are also a number of strategies that startups can pursue to build their own moats. Some startups have offered a differentiated value proposition on top of standard automation capabilities. One example is TabNine, an AI code assistant that has focused on privacy, compliance, and customer data protection. This could be an effective way to attract enterprise customers and governments who are hesitant to implement AI because of the privacy risks. Other startups have focused on targeting specific user groups and building highly customized workflows.

While both of these strategies have their strengths, it’s unlikely that they will win out against the massive distribution advantage that incumbents have. Instead, what we see as the most compelling path to opportunity is for startups to go out on the margins and innovate on new workflows that lie outside the scope of incumbent solutions. This means veering away from workflows like code generation, where much of the value will likely accrue to tools like Copilot that already have a strong lead, and instead coming up with app-based solutions for functions that are currently outsourced, like code maintenance and migration. By focusing outside of the incumbent strike zone, new entrants have much more opportunity to leverage AI to build solutions for tasks that have historically been too cumbersome to complete in-house. Introducing LLMs to these workflows and enabling end-to-end automation of these tasks can vastly improve both speed and economics, and push the envelope from “copilot” to “autopilot.”

Market Map

Note: Thank you to Anika Ayyar who co-wrote and co-researched this article. Anika is currently pursuing her MBA at HBS and spent the summer interning at Mayfield Fund.

Sources:

https://github.blog/2023-02-14-github-copilot-now-has-a-better-ai-model-and-new-capabilities